Google's New Robots.txt Sitemap

Via the Google Blog: Google Sitemaps has added more stats, along with analysis of robots.txt files.

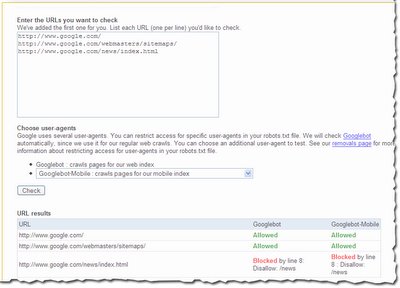

The new robots.txt tab provides Googlebot's view of your site's robots.txt file, including when the spider last accessed it, the HTTP status the request returned (a 404 error if the file does not exist), and whether the file blocks access to your home page. It also shows any syntax errors in the file.

You can enter a list of URLs to see if the robots.txt file allows or blocks them.

You can also test changes to your robots.txt file by entering them and checking them against the Googlebot user-agent, other Google user agents, or the Robots Standard. This lets you experiment with changes to see how they would affect the crawl of your site, and make sure the file contains no errors, before you change the live file on your site. If you don't have a robots.txt file, you can use this page to test a potential one before you add it to your site.
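For a rough offline version of the same kind of check, Python's standard-library `urllib.robotparser` can evaluate a draft robots.txt against a list of URLs. This is only a sketch: the draft rules, the `example.com` URLs, the `OtherBot` agent name, and the `check_urls` helper are made up for illustration, and the standard-library parser applies its own reading of the Robots Standard, which may differ from how Googlebot interprets the same file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft robots.txt to try out before publishing it.
DRAFT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /tmp/
"""

# Hypothetical URLs to check against the draft.
URLS = [
    "https://example.com/",
    "https://example.com/private/report.html",
    "https://example.com/tmp/cache.html",
]


def check_urls(robots_txt, user_agent, urls):
    """Return {url: True if allowed, False if blocked} for one user agent."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


googlebot_results = check_urls(DRAFT, "Googlebot", URLS)
other_results = check_urls(DRAFT, "OtherBot", URLS)

for url in URLS:
    print(url, "| Googlebot:", googlebot_results[url], "| OtherBot:", other_results[url])
```

Because the draft has a group specifically for Googlebot, only that group applies to it, so `/tmp/` stays open to Googlebot while the catch-all group blocks it for other agents. Surprises of that kind are what testing a draft before publishing is meant to catch.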

More stats

Crawl stats now include the page on your site that had the highest PageRank, by month, for the last three months.

Page analysis now includes a list of the most common words in your site's content and in external links to your site. This gives you additional information about why your site might come up for particular search queries.
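To get a feel for what a "most common words" list looks like, here is a minimal sketch that counts word frequency across a few pieces of link text. The sample anchor texts, the `most_common_words` helper, and the simple tokenizing rule are all assumptions for illustration; Google has not said how it computes its own list.

```python
import re
from collections import Counter


def most_common_words(texts, n=5):
    """Count lowercase word occurrences across texts and return the top n."""
    counts = Counter()
    for text in texts:
        # Naive tokenizer: runs of letters, digits, or apostrophes.
        counts.update(re.findall(r"[a-z0-9']+", text.lower()))
    return counts.most_common(n)


# Hypothetical anchor texts from external links to a site.
anchor_texts = ["cheap blue widgets", "blue widgets store", "widgets"]
top_words = most_common_words(anchor_texts, n=2)
print(top_words)
```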
